Entropy is a concept that plays a significant role in various fields, including physics, information theory, and chemistry. It is a measure of the disorder or randomness in a system, and understanding the difference between high entropy and low entropy is crucial to understanding the behavior and characteristics of different systems.

In this article, I will try to give you a thorough understanding of high entropy vs low entropy systems. Whether you are a physics enthusiast, a chemistry student, or simply curious about entropy, this guide will help you understand what high entropy vs low entropy means.

Read on if you still can’t figure out the difference between high entropy and low entropy.

Introduction to Entropy

Okay, first things first before we dive into explaining high entropy vs low entropy. What is entropy? Entropy originates from thermodynamics but has since found applications in various fields, including information theory and chemistry.

If you want to know the difference between high and low entropy and what they mean, you first need to know the basics. That is, knowing what entropy is.

What is Entropy?

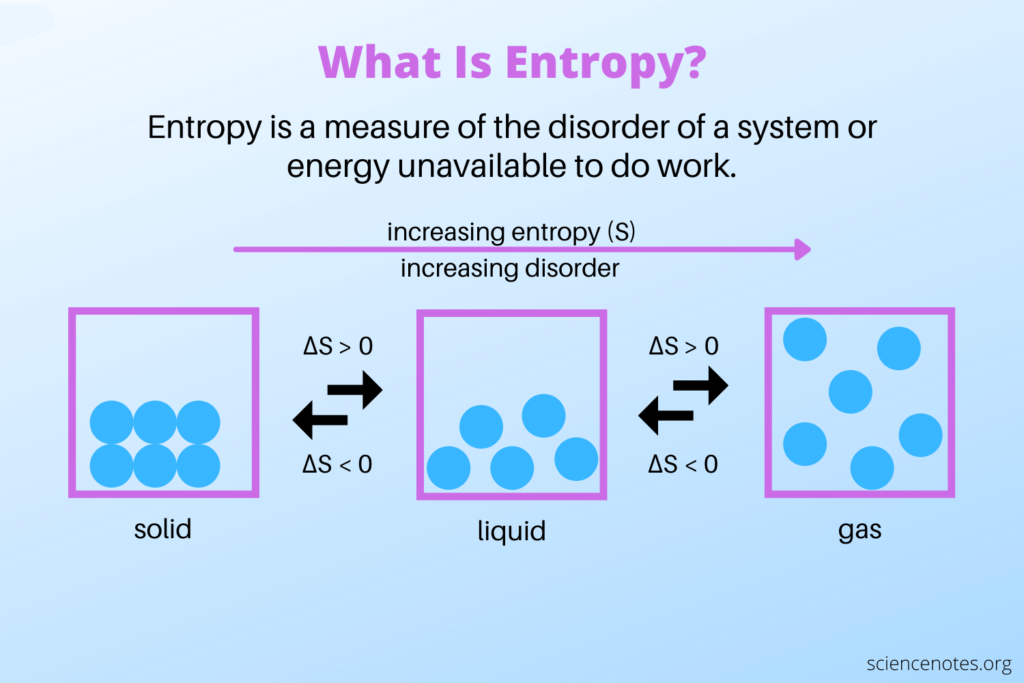

We generally define entropy as a measure of disorder, or sometimes randomness, in a given system. It measures how many possible microscopic arrangements (microstates) are consistent with what we observe about the system as a whole. In simpler terms, entropy represents a system’s level of chaos or unpredictability: the entropy of any system, including our universe as a whole, tells us how disordered or ordered that system is.

The Second Law of Thermodynamics

Entropy is closely connected to the Second Law of Thermodynamics, which states that the total entropy of an isolated system never decreases over time: it increases in every real (irreversible) process and stays constant only in idealized reversible ones. This law implies that natural processes tend to move towards a state of higher disorder.
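To see why an isolated system drifts toward higher entropy, here is a small Python sketch of the Ehrenfest urn model, a classic toy model rather than a real gas simulation. It assumes N identical particles in a box split into two halves; at each step one randomly chosen particle hops to the other half. All particles start on the left, a low-entropy arrangement. The function name `ehrenfest` and its parameters are my own illustrative choices.

```python
import random

def ehrenfest(n_particles, steps, seed=0):
    """Ehrenfest urn model (toy model, not a physical gas simulation).

    All particles start in the left half of a box. At each step one
    randomly chosen particle hops to the other half. Returns the number
    of particles in the left half after every step.
    """
    rng = random.Random(seed)          # seeded so runs are reproducible
    in_left = [True] * n_particles     # True means the particle is on the left
    left_count = n_particles
    history = []
    for _ in range(steps):
        i = rng.randrange(n_particles)  # pick one particle at random
        left_count += -1 if in_left[i] else 1
        in_left[i] = not in_left[i]     # move it to the other half
        history.append(left_count)
    return history

history = ehrenfest(n_particles=100, steps=2000)
for step in (1, 10, 50, 100, 500, 2000):
    print(f"after {step:4d} steps: {history[step - 1]} particles on the left")
```

The left-hand count falls quickly from 100 toward about 50 and then fluctuates around that value. Nothing forbids all the particles from returning to the left, but with 100 particles that arrangement is so rare that you would essentially never see it.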

Entropy in Information Theory

In information theory, entropy represents the average amount of information in a message or data source. It measures the uncertainty or randomness in the information. The higher the entropy, the more unpredictable the data.
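Shannon’s formula makes this concrete: for symbols that occur with probabilities p_i, the entropy is H = −Σ p_i log₂ p_i bits per symbol. The Python sketch below estimates it from the symbol frequencies of a single string. It is a simplification: it treats each symbol as independent and ignores their order, so a repeating pattern such as "abababab" still scores 1 bit per symbol even though it is easy to predict. The function name `shannon_entropy` is just an illustrative choice.

```python
import math
from collections import Counter

def shannon_entropy(message):
    """Estimate Shannon entropy in bits per symbol from symbol frequencies.

    Uses H = sum(p * log2(1/p)), which equals -sum(p * log2(p)).
    """
    n = len(message)
    if n == 0:
        return 0.0
    counts = Counter(message)  # how often each symbol appears
    return sum((c / n) * math.log2(n / c) for c in counts.values())

for text in ("aaaaaaaa", "abababab", "abcdefgh"):
    print(f"{text!r}: {shannon_entropy(text):.2f} bits per symbol")
```

A string of one repeated letter scores 0 bits, while eight distinct letters score 3 bits per symbol, the maximum possible for eight equally likely symbols.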

Entropy in Chemistry

Entropy plays a crucial role in understanding reactions and phase transitions in chemistry. It is related to the number of microstates available to a system. Together with enthalpy (the heat absorbed or released), it determines the spontaneity and direction of chemical reactions.

Entropy and Probability

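Entropy is closely related to probability. A macrostate (an overall condition, such as “half the gas in each half of the box”) that can be realized by a larger number of microscopic arrangements has a higher entropy and is therefore more likely to be observed. No single arrangement is more likely than any other; high-entropy states win simply because there are so many more of them.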
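Boltzmann’s formula, S = k_B ln W, ties this to counting: W is the number of microstates that produce a given macrostate, and k_B is Boltzmann’s constant. As a toy illustration (coins standing in for particles, a simplifying assumption), the Python sketch below flips 10 coins and treats “how many heads” as the macrostate. It reports entropy in units of k_B; the helper `macrostate_table` is hypothetical.

```python
import math

def macrostate_table(n):
    """Return (heads, W, probability, S/k_B) for every macrostate of n fair coins."""
    total = 2 ** n  # each coin is heads or tails, so 2**n microstates in all
    rows = []
    for heads in range(n + 1):
        w = math.comb(n, heads)  # microstates with exactly this many heads
        rows.append((heads, w, w / total, math.log(w)))  # S / k_B = ln W
    return rows

for heads, w, p, s in macrostate_table(10):
    print(f"{heads:2d} heads: W = {w:3d}, P = {p:.3f}, S/k_B = {s:.2f}")
```

All heads can happen in only 1 way (S = 0), while exactly 5 heads can happen in 252 of the 1,024 possible ways, giving it both the highest entropy and the highest probability (about 0.246).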

Entropy as a Measure of Disorder

We may define entropy as a measure of disorder, but it is essential to understand that it does not imply chaos in the traditional sense. Instead, it represents the number of possible arrangements or states within a system.

High Entropy: Characteristics and Examples

High-entropy systems have a high level of disorder, randomness, and unpredictability. In this section, we will delve deeper into the characteristics of high entropy and provide real-life examples to illustrate how common it is in various contexts.

What is High Entropy?

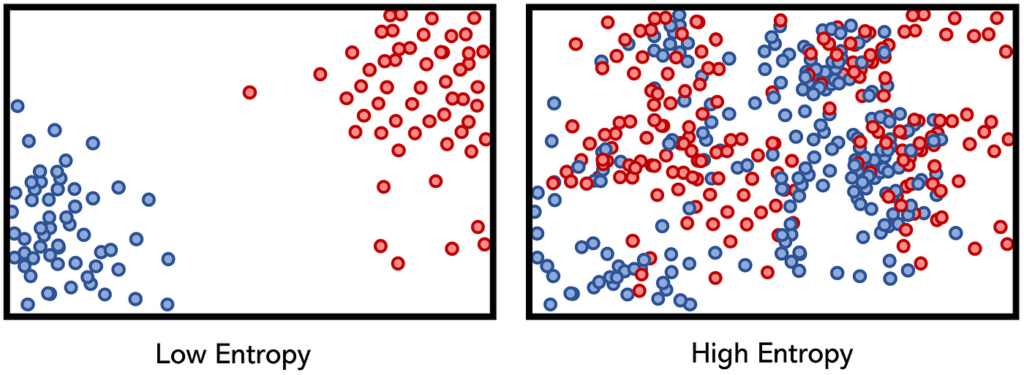

High entropy refers to the state of a system with numerous possible arrangements or configurations. It signifies a high degree of disorder and randomness within the system. In high entropy systems, the elements or components are less organized, and their arrangement is more chaotic.

Properties of High Entropy Systems

- Disordered Arrangements. In disordered arrangements, the components or elements are spread throughout the system and occupy their positions at random. There is no specific pattern or organization in the arrangement.

- Increased Energy Distribution. In high-entropy systems, energy is spread out among the various components, which is the state systems settle into at equilibrium. High entropy systems tend to have a more even distribution of energy.

- Increased Complexity. High entropy systems often exhibit higher complexity due to the multiple possibilities and arrangements. This complexity arises from the sheer number of potential states the system can be in.

Real-life Examples of High Entropy

- Gas Molecules. A gas is an excellent example of a high entropy system. The gas molecules move freely and randomly in all directions, with no specific pattern or organization. The arrangement of gas molecules is highly disordered and unpredictable.

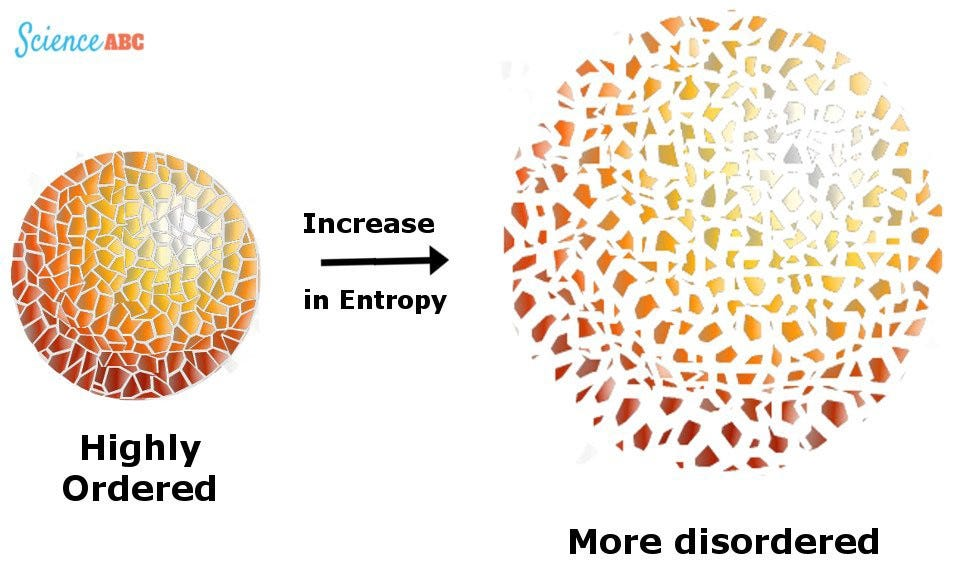

- Shattered Glass. When a glass shatters, it breaks into numerous fragments that scatter in all directions. The shattered glass exhibits high entropy as the pieces are randomly dispersed and lack any specific order.

- Mixed Sand and Pebbles. If you pour a mixture of sand and pebbles into a container and shake it, the resulting arrangement will have high entropy. The sand and pebbles will mix and distribute randomly, creating a disordered system.

- Scrambled Eggs. Scrambled eggs represent a high entropy system as the egg whites and yolks mix together randomly when whisked. The resulting mixture lacks any specific arrangement or organization.

- Weather Systems. Weather patterns are highly complex and exhibit high entropy. The interactions of various atmospheric factors, such as temperature, pressure, and humidity, create a state of disorder and unpredictability.

Low Entropy: Characteristics and Examples

Low entropy systems are characterized by a high level of order, organization, and predictability. This means that the lower the entropy, the higher the order.

What is Low Entropy?

Low entropy refers to a state of a system where there is a high degree of order and organization. In low entropy systems, the elements or components are arranged in a specific pattern or structure, leading to a predictable and less chaotic state.

Properties of Low Entropy Systems

- Ordered Arrangements. Low entropy systems exhibit ordered arrangements, where the components or elements are structured and organized. There is a specific pattern or organization in the arrangement.

- Energy Concentration. In low entropy systems, energy tends to be concentrated or localized within specific components or regions. The distribution of energy is not evenly spread out, leading to a state of imbalance.

- Reduced Complexity. Low entropy systems often have reduced complexity due to the limited number of possibilities and arrangements. The simplicity arises from the specific and organized state of the system.

Real-life Examples of Low Entropy

- Crystal Structures. Crystals are excellent examples of low entropy systems. The atoms or molecules in a crystal lattice have a highly ordered and repetitive pattern. The structure exhibits a specific arrangement and organization.

- Geometric Shapes. Geometric shapes, like squares, circles, and triangles, represent low entropy systems. These shapes have a specific structure and arrangement defined by their mathematical properties.

- DNA Molecules. DNA molecules possess a highly ordered and specific structure. Two strands wind around each other in a double helix, held together by complementary base pairs, representing a low entropy system. The sequence of DNA bases carries genetic information.

- Crystalline Solids. Crystalline solids, such as salt or sugar crystals, exhibit a low entropy state. The atoms or molecules in these solids have a regular, repeating pattern, resulting in a highly ordered structure.

- Music Notation. Musical compositions and their notations represent low entropy systems. The arrangement of musical notes follows specific rules and patterns, leading to a structured and organized piece of music.

The Impact of Entropy in Different Fields

Entropy plays a significant role in various fields, including physics, information theory, and chemistry.

Entropy in Physics and Thermodynamics

In physics and thermodynamics, entropy is closely tied to the Second Law of Thermodynamics. This law states that the total entropy of an isolated system never decreases over time. The implications of entropy in physics and thermodynamics include:

- Energy Transformations. Entropy explains the direction of energy transformations and limits their efficiency. Energy spontaneously spreads from concentrated forms to dispersed ones, increasing the overall entropy, and no heat engine can turn all of its heat input into work.

- Heat Transfer. Heat naturally flows from hotter regions to cooler regions. The hot region loses some entropy, but the cooler region gains more, so the total entropy increases.

- Irreversibility. As entropy increases, a spontaneous return of the system to its initial state becomes overwhelmingly unlikely; restoring it requires outside work, which increases entropy elsewhere.
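The heat-transfer and efficiency points can be checked with a little arithmetic. When heat Q leaves a hot reservoir at temperature T_hot and enters a cold one at T_cold, the total entropy change is ΔS = Q/T_cold − Q/T_hot, which is positive whenever T_hot > T_cold. The same two temperatures cap any heat engine’s efficiency at 1 − T_cold/T_hot (the Carnot limit). This Python sketch assumes reservoirs large enough that their temperatures stay constant; the numbers are illustrative, not measured data.

```python
def entropy_change_heat_transfer(q, t_hot, t_cold):
    """Total entropy change (J/K) when heat q (J) flows from t_hot to t_cold (kelvin).

    Assumes both reservoirs are large enough to stay at constant temperature.
    """
    ds_hot = -q / t_hot   # hot reservoir loses heat, so its entropy drops
    ds_cold = q / t_cold  # cold reservoir gains the same heat at a lower temperature
    return ds_hot + ds_cold

def carnot_efficiency(t_hot, t_cold):
    """Upper bound on the fraction of heat input an engine can convert to work."""
    return 1.0 - t_cold / t_hot

print(f"total entropy change: {entropy_change_heat_transfer(1000.0, 400.0, 300.0):.3f} J/K")
print(f"Carnot limit: {carnot_efficiency(400.0, 300.0):.0%}")
```

With 1,000 J flowing from 400 K to 300 K, the hot side loses 2.5 J/K, the cold side gains about 3.33 J/K, and the total rises by about 0.83 J/K. An engine running between those temperatures could convert at most 25% of its heat input into work.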

Entropy in Information Theory

In information theory, entropy is the average amount of information or uncertainty contained in a message or data source. It is calculated based on the probabilities of different outcomes.

- Data Compression. Entropy sets the theoretical limit for lossless compression: on average, data from a source cannot be encoded in fewer bits per symbol than its entropy. Higher entropy implies higher randomness and, therefore, less compressibility.

- Error Detection and Correction. Entropy-based quantities, such as the capacity of a noisy channel, tell engineers how much information can be sent reliably and guide how much redundancy error detection and correction codes need to add.

- Data Encryption. Encryption keys and random values need high entropy so that attackers cannot guess them, and well-encrypted data looks like high-entropy random noise, which makes it difficult to decipher.
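The link between entropy and compressibility is easy to try with Python’s standard `zlib` module. The sketch compresses 100,000 bytes of a repeating pattern (low entropy) and 100,000 bytes from `os.urandom` (close to the maximum of 8 bits of entropy per byte). Exact output sizes vary by zlib version and, for the random input, from run to run, so treat the numbers as indicative.

```python
import os
import zlib

ordered = b"AB" * 50_000            # highly repetitive, low-entropy data (100,000 bytes)
random_bytes = os.urandom(100_000)  # OS randomness, close to 8 bits of entropy per byte

for label, data in (("ordered", ordered), ("random", random_bytes)):
    compressed = zlib.compress(data, 9)  # level 9 = strongest compression
    print(f"{label}: {len(data)} bytes -> {len(compressed)} bytes")
```

The repetitive data shrinks to a tiny fraction of its size, while the random data does not shrink at all (it typically grows slightly because of the format’s overhead), as the entropy limit predicts.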

Entropy in Chemistry

In chemistry, entropy is related to the number of possible microstates available to a system. It influences the spontaneity and direction of chemical reactions and phase transitions. The implications of entropy in chemistry include:

- Reaction Spontaneity. Entropy change (ΔS) is a factor in determining the spontaneity of a chemical reaction. At constant temperature and pressure, a reaction is spontaneous when the Gibbs free energy change, ΔG = ΔH − TΔS, is negative, so a positive ΔS (an increase in entropy) makes a reaction more likely to be spontaneous, especially at high temperatures.

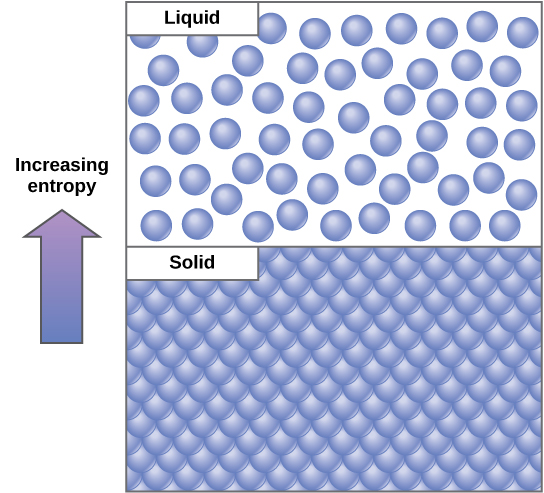

- Phase Transitions. Entropy is involved in phase transitions, such as the conversion between solid, liquid, and gas states. The transition from a more ordered state to a more disordered state corresponds to an increase in entropy.

- Equilibrium. Entropy plays a role in understanding chemical equilibria. The equilibrium position depends on the balance between enthalpy (ΔH) and entropy (ΔS) changes.
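The balance between enthalpy and entropy is usually expressed through the Gibbs free energy change, ΔG = ΔH − TΔS: at constant temperature and pressure, a process is spontaneous when ΔG < 0. The Python sketch below applies this to melting ice, using approximate textbook values for the enthalpy of fusion (about 6.01 kJ/mol) and the entropy of fusion (about 22.0 J/(mol·K)). Treating both as constant with temperature is a simplifying assumption.

```python
DELTA_H = 6010.0  # J/mol, approximate enthalpy of fusion of ice
DELTA_S = 22.0    # J/(mol*K), approximate entropy of fusion of ice

def gibbs_change(delta_h, delta_s, temperature):
    """Gibbs free energy change dG = dH - T*dS in J/mol (temperature in kelvin)."""
    return delta_h - temperature * delta_s

for t in (263.15, 283.15):  # 10 degrees below and above 0 degrees Celsius
    dg = gibbs_change(DELTA_H, DELTA_S, t)
    verdict = "spontaneous" if dg < 0 else "not spontaneous"
    print(f"T = {t:.2f} K: dG = {dg:+.0f} J/mol, melting is {verdict}")

# dG = 0 where T = dH / dS: the temperature at which the two phases coexist
print(f"crossover temperature: {DELTA_H / DELTA_S:.1f} K")
```

Below about 273 K, ΔG is positive and ice stays frozen; above it, the entropy term TΔS outweighs ΔH and melting becomes spontaneous. The crossover lands close to 0 °C, as expected.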

High Entropy vs Low Entropy: Comparative Analysis

Now that we have gone through high entropy and low entropy one by one, we can compare them. As you might have already seen, they sit at opposite ends of the same scale and have very different consequences in every environment.

Comparison of High and Low Entropy Systems

- Degree of Disorder. High entropy systems exhibit a high level of disorder and randomness, whereas low entropy systems display a high degree of order and organization.

- Arrangements. In high entropy systems, the elements or components are randomly distributed with no specific pattern, while low entropy systems have structured and organized arrangements.

- Energy Distribution. High entropy systems tend to have a more even distribution of energy, while low entropy systems have energy concentrated or localized within specific components or regions.

- Complexity. High entropy systems are often more complex due to the numerous possibilities and arrangements, while low entropy systems are relatively simpler due to their specific and organized state.

Where High or Low Entropy is Preferred

- Energy Conversion. Energy conversion processes, such as heat transfer and power generation, are driven by the increase in entropy. Energy flows from a concentrated, low-entropy source (such as hot steam or fuel) toward a dispersed, high-entropy state, and useful work is extracted along the way.

- Information Storage and Transmission. In information theory, low entropy systems are preferred for efficient data storage and transmission. Low entropy ensures better compression and error detection/correction capabilities.

- Chemical Reactions. The spontaneity of chemical reactions is influenced by entropy. All else being equal, reactions with a positive entropy change (ΔS) are more likely to occur spontaneously, favoring high entropy systems.

- Stability and Order. Low entropy systems are associated with stability and order. Crystalline solids and well-organized structures tend to have low entropy, providing stability and predictability.

Understanding Entropy Transition

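Systems can undergo transitions from high entropy to low entropy or vice versa. These transitions can occur due to external influences or natural processes. A system’s entropy can only decrease if it exchanges energy with its surroundings and the entropy of the surroundings rises by at least as much, as when water freezes in a freezer. Understanding entropy transitions is crucial in various fields, including phase transitions, information processing, and system optimization.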

By comparing high entropy and low entropy systems, we can have a deeper understanding of the dynamics and behaviors of different systems in various fields. From energy conversion to information storage and chemical reactions, the concept of entropy plays a vital role in determining the characteristics and applications of these systems.

Conclusion

In conclusion, the exploration of “High Entropy vs Low Entropy” unravels the fundamental principles governing the behavior of diverse systems, spanning physics, information theory, and chemistry. The distinction between high entropy and low entropy, characterized by disorder and order, chaos and predictability, holds paramount significance across a spectrum of real-world scenarios.

Whether contemplating energy conversions, information storage, or chemical reactions, recognizing the role of entropy is crucial. High entropy systems are marked by randomness and complexity, and processes like energy conversion are driven by the natural rise in entropy as concentrated energy disperses.

In contrast, low entropy systems, synonymous with order and stability, contribute to the structured realms of crystalline solids and well-organized information storage. This nuanced understanding equips individuals across scientific disciplines to navigate the intricate dynamics of systems transitioning between states of entropy, fostering advancements in technology, science, and innovation.

FAQ

What does a high entropy mean?

High entropy means a high level of disorder, randomness, or uncertainty in a system. It suggests that the information or energy within the system is more dispersed and less predictable. In information theory, high entropy implies greater complexity or randomness in data, while in thermodynamics, it signifies a state with increased disorder and less available energy for work.

What is an example of low entropy and high entropy?

A well-shuffled deck of cards represents high entropy: it could be in any one of 52! (about 8 × 10^67) possible orders, so its order is random and unpredictable. In contrast, a neatly stacked and sorted deck has low entropy, since only one arrangement counts as sorted and it is easily predictable.
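The card example can be put into numbers. Assuming a perfect shuffle, where every order is equally likely, the entropy of the deck in bits is log₂ of the number of possible orders. This short Python sketch computes it; a sorted deck, whose order is known in advance, has zero entropy by the same measure.

```python
import math

orderings = math.factorial(52)  # distinct orders of a 52-card deck
bits = math.log2(orderings)     # entropy of a perfectly shuffled deck, in bits

print(f"possible orders: {orderings:.3e}")
print(f"entropy of a shuffled deck: {bits:.2f} bits")
print(f"entropy of a sorted deck: {math.log2(1):.2f} bits")  # only one possible order
```

That works out to roughly 8.07 × 10^67 possible orders and about 225.6 bits of entropy for the shuffled deck.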

What does increased entropy mean?

Increased entropy signifies a rise in disorder, randomness, or uncertainty within a system. In information theory, it suggests a greater complexity or unpredictability in data. In thermodynamics, increased entropy implies a state where energy becomes more dispersed and less available for useful work, indicating a move towards greater disorder. Essentially, it represents a departure from ordered or organized arrangements towards more chaotic states.